The Viral Deepfake Video: A Stirring Response to Ye’s Antisemitism

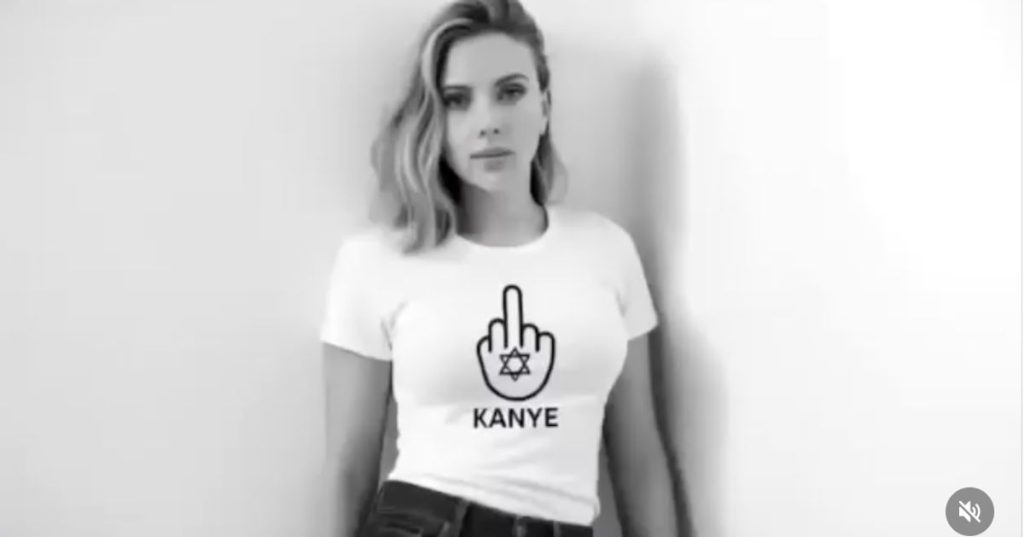

A stirring and controversial video went viral on Tuesday night, appearing to show Jewish celebrities like Jerry Seinfeld, Drake, Scarlett Johansson, and even Simon and Garfunkel, along with tech CEOs such as Mark Zuckerberg and OpenAI’s Sam Altman, giving a collective middle finger to Ye, the artist formerly known as Kanye West. The black-and-white clip, set to the upbeat Jewish folk song “Hava Nagila,” showed the celebrities wearing white T-shirts printed with a Star of David inside a hand flipping the bird, with “Kanye” written below. The video ended with a fake Adam Sandler giving Ye the middle finger himself and urging viewers to “Join the Fight Against Antisemitism.”

But here’s the catch: none of the celebrities featured in the video actually participated or gave their consent. The video was entirely created using AI, a sophisticated deepfake designed to look real. Despite its convincing nature, it was eventually revealed as a clever forgery, sparking both shock and debate about the ethics of AI-generated content.

The video gained traction quickly, with many users sharing it without realizing it was fake. Melissa Gilbert, an actor from “Little House on the Prairie” and a former Screen Actors Guild president, shared the video on Threads, where it racked up over 8,000 likes, 1,800 reposts, and 2,400 shares before she deleted it 19 hours later. Even after some users pointed out its AI origins, others were unsure how to distinguish it from real footage. “How can you tell it’s AI?” one commenter asked. “The fabric of the shirts moves, there are correct shadows? I don’t know how to tell.” The video also included deepfake cameos from other Jewish celebrities, such as David Schwimmer and Lisa Kudrow from “Friends,” adding to its deceptive realism.

Scarlett Johansson Calls for AI Regulation

Scarlett Johansson, one of the celebrities featured in the deepfake, was quick to respond to the video. In a statement to People magazine, the actor expressed her concerns about the misuse of AI, particularly in amplifying hate speech. “I am a Jewish woman who has no tolerance for antisemitism or hate speech of any kind,” Johansson said. “But I also firmly believe that the potential for hate speech multiplied by A.I. is a far greater threat than any one person who takes accountability for it. We must call out the misuse of A.I., no matter its messaging, or we risk losing a hold on reality.”

Johansson’s statement highlights the dual nature of the video: while it aimed to address antisemitism, its creation and dissemination raised significant ethical questions about the role of AI in spreading misinformation. The video’s misleading nature serves as a stark reminder of the challenges posed by deepfakes and the need for stricter regulations on their use.

Why the Video Was So Believable

Experts who study AI and online misinformation say the video’s high production quality made it particularly convincing. Julia Feerrar, an associate professor at Virginia Tech, pointed to the grayscale, quick cuts, and blank background as features that obscured the usual artifacts. “Many of the video’s features make it really hard to spot the kinds of tell-tale signs we’ve come to expect from generative AI,” Feerrar explained.

However, upon closer inspection, some flaws became apparent. For example, at around the 00:28 mark, the fake Lenny Kravitz’s fingers appeared distorted and merged into themselves—a common issue with AI-generated images, which often struggle with rendering fingers and hands correctly. Despite these subtle giveaways, few people took the time to scrutinize the video before sharing it. “I would have never noticed that without spending a lot of time and actively looking for it,” Feerrar said.

The video’s believability was also bolstered by contextual clues that aligned with real-world events. Many of the celebrities featured have been vocal about rising antisemitism in recent years, making it plausible that they would band together to address the issue. The fact that Hollywood insider Melissa Gilbert shared it lent further credibility, as her post implied that the video was an authentic response from the Jewish community.

Emotional Appeal and the Spread of Misinformation

The video’s rapid spread can also be attributed to its emotional appeal. Antisemitism is a deeply personal and serious issue for many people, and the video tapped into that sentiment by presenting a unified, defiant response to Ye’s hateful rhetoric. According to Amanda Sturgill, a journalism professor at Elon University and host of the “UnSpun” podcast, the video’s emotional resonance made it inherently “likable” and shareable.

“I think all the ‘likes’ suggests this is a really emotional issue for audiences,” Sturgill said. “It’s the kind of thing that people would want to believe is real, and that has a way of short-circuiting one’s usual shenanigan detection abilities.”

The video also capitalized on widespread dislike for Ye, whose controversial remarks and far-right leanings have made him a polarizing figure. As one expert noted, few people today would disagree with former President Barack Obama’s 2009 assessment of Ye as a “jackass”—a sentiment that has only intensified in light of his recent behavior.

The Broader Implications of Deepfakes

The viral video is more than just a cleverly crafted deepfake; it highlights a growing concern about the role of AI in misinformation and the erosion of trust in media. As deepfakes become increasingly sophisticated, the ability to distinguish real content from fake becomes more challenging.

According to a study conducted by Lee Rainie, director of the Imagining the Digital Future Center at Elon University, 45% of American adults say they’re not confident in their ability to detect fake photos, videos, and audio files. This lack of confidence underscores the need for greater digital literacy in the age of AI.

Rainie emphasized that the default setting for any media consumer should be skepticism. “Emerging digital tools are getting so much better at faking images, audio files, and video that the default setting for any media consumer needs to be this: be careful, be skeptical, and be doubtful,” he warned.

Feerrar also suggested relying on contextual clues, such as verifying the appearance of people and places through other sources, as a practical step to identify deepfakes. For example, she noticed