Introduction: Understanding Meta’s Shift in Content Moderation

Meta (formerly Facebook) has made headlines with a significant shift in its content moderation strategy. The company, which owns major platforms like Facebook and Instagram, recently encountered an issue where graphic and violent content appeared in users’ Instagram Reels feeds, prompting an apology and a hasty fix. The incident comes as Meta moves away from its third-party fact-checking program toward a community-driven approach inspired by platforms like Elon Musk’s X. The change marks a pivotal moment in how Meta handles content moderation and raises questions about the future of information management on its platforms.

Meta’s Apology: A Response to Graphic Content

Meta’s recent apology came after numerous Instagram users reported seeing violent and gory content, labeled as "sensitive content," in their Reels feeds. The company acknowledged the mistake, stating that it had fixed an error that led to the inappropriate recommendations. The incident is a stark reminder of the challenges of content moderation, especially of balancing user freedom against protection from harmful material. Meta has long sought to shield users from disturbing content and typically removes graphic material under its policies. The error, though swiftly corrected, underscores how difficult content curation is on platforms of this size.

Shift in Policy: From Third-Party Fact-Checking to Community Moderation

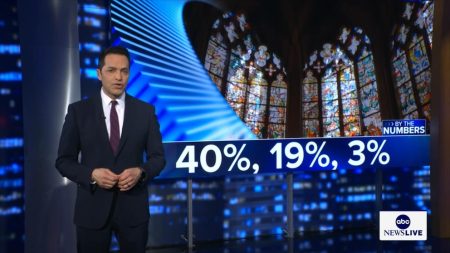

In January, Meta made a significant policy change, discontinuing its third-party fact-checking program in favor of a community-driven system. The shift mirrors the approach of Elon Musk’s X, which emphasizes user participation in content moderation. Previously, Meta relied on external fact-checkers to combat misinformation; ending that program reflects a move toward decentralization and user empowerment. The change is part of a broader strategy to redefine Meta’s role in content governance, with the aim of fostering a sense of community responsibility among users.

The Community Notes Program: A New Era of User Participation

Central to Meta’s new strategy is the Community Notes program, designed to draw on user input for content moderation. Similar to X’s approach, the system allows users to contribute notes that add context or corrections to posts. Other users can then view these notes, which gives them more information about the content they encounter. The program aims to cultivate a collaborative environment in which users actively help maintain the integrity of the platform. By involving the community, Meta seeks to keep pace with changing online content and the challenge of moderating at scale.

Challenges Ahead: Balancing Moderation and Misinformation

The transition to a community-driven system presents several challenges. While user participation can enhance moderation, it also risks introducing bias and contributions of uneven quality. Ensuring that notes are accurate and unbiased is crucial, as misleading or partisan notes could make misinformation worse. Meta must implement safeguards that prevent the spread of false information while still empowering users. The company also faces the task of educating users on effective moderation practices and on the responsibility that comes with a community-driven approach.

Conclusion: The Implications of Meta’s New Direction

Meta’s shift towards community-driven moderation signals a significant change in how it manages content, with implications for both users and society. While the approach offers benefits such as increased user involvement and added context on posts, it also poses risks related to misinformation and bias. The success of the strategy will depend on Meta’s ability to balance user empowerment with robust safeguards. Meta’s direction may set a precedent for other platforms and shape the future of online content governance. The shift is a reminder of the ongoing challenges of maintaining a safe and informed online environment.