What OpenAI’s New Image Generator Means for Our Future

The Dawn of a New Creative Era

We’re living through a remarkable moment in technological history, one that feels both exhilarating and unsettling in equal measure. OpenAI’s latest image generation technology is more than another clever algorithm or tech-industry novelty; it marks a fundamental shift in how we create, consume, and think about visual content. For the first time, anyone with an internet connection and a few words can conjure detailed, sophisticated images that would have taken skilled artists hours, days, or even weeks to produce. This isn’t science fiction anymore. It’s our present reality, and it’s forcing us to reconsider what we thought we knew about creativity, artistry, and the unique value of human imagination.

To those of us who remember when computers struggled to render simple shapes, the process seems almost magical. You type a description, perhaps something like “a Victorian-era detective examining holographic evidence in a neon-lit cyberpunk alley,” and within seconds the AI produces a fully realized image that captures not just the literal elements you requested but the mood, atmosphere, and aesthetic coherence of a scene that never existed until that moment. The system was trained on an enormous collection of images, many of them drawn from the internet, and in the process it absorbed patterns, styles, artistic techniques, and visual relationships that let it generate new works that feel simultaneously familiar and unprecedented. The implications reach into virtually every corner of our visual culture, from advertising and entertainment to education, journalism, and personal expression.

Democratization or Disruption? The Double-Edged Sword

On one hand, there’s something genuinely democratic and liberating about this technology. For generations, visual creation has been gated behind technical skill, expensive equipment, and years of training. If you wanted a professional-looking illustration for your small business, you needed either artistic talent or the budget to hire someone who had it. If you were a writer who could envision the perfect cover for your novel but couldn’t draw to save your life, you were stuck relying on your ability to communicate your vision to someone else, hoping they’d interpret it correctly. Now, those barriers have largely evaporated. Students working on presentations, entrepreneurs launching startups, authors self-publishing books, activists creating awareness campaigns—all of these people suddenly have access to visual creation tools that would have seemed impossibly powerful just a few years ago.

However, this democratization comes with consequences we’re only beginning to understand. Professional illustrators, graphic designers, and digital artists are watching nervously as clients increasingly turn to AI-generated images instead of commissioning human-created work. The economics are brutally simple: why pay hundreds or thousands of dollars for a custom illustration when you can generate something “good enough” for a fraction of the cost? This isn’t just about money changing hands; it’s about the viability of creative careers that people have spent years building. The counterargument, that these tools will augment human creativity rather than replace it, feels increasingly hollow to artists watching their commissions dry up. We’re witnessing a technological shift that may fundamentally restructure creative industries, and unlike earlier waves of automation, which mainly affected manufacturing and routine cognitive work, this one is coming for jobs we thought were uniquely, safely human.

The Question of Authenticity and Truth in the Visual Age

Perhaps even more troubling than the economic disruption is what this technology means for our relationship with visual truth. Photography has always carried special weight in human culture because it represented captured reality. Even when we knew images could be manipulated, there was still a fundamental connection between a photograph and an actual moment when light hit a sensor or film. That connection can no longer be taken for granted. We’re entering an era where seeing is no longer believing, where any image might be entirely fabricated, and where the visual “evidence” we’ve relied on for journalism, legal proceedings, historical documentation, and personal memory can no longer be trusted at face value.

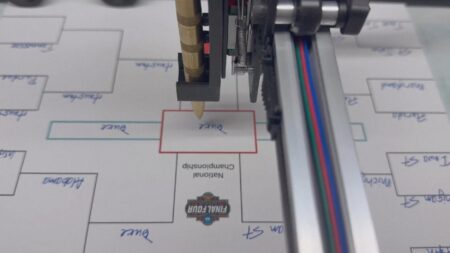

The technology can generate photorealistic images of events that never happened, people who don’t exist, and scenarios crafted entirely from imagination or, more sinister still, from a deliberate intent to deceive. Deepfakes have already shown us the tip of this iceberg, but image generation takes it further, making it trivially easy for anyone to create convincing visual misinformation. Imagine a political attack ad showing a candidate at an event they never attended, viral images of disasters that never occurred causing real-world panic, or historical “evidence” fabricated to support false narratives. We’re going to need new frameworks for visual verification, new forms of digital watermarking and authentication, and, perhaps most challenging of all, a sustained effort to teach the public how to evaluate visual information critically. The phrase “pics or it didn’t happen” loses its meaning in a world where pics can be generated for things that definitely didn’t happen.

Creative Collaboration or Creative Obsolescence?

The question of what happens to human creativity in this new landscape is complex and doesn’t have simple answers. Some artists are embracing these tools as collaborators, using them to rapidly prototype ideas, overcome creative blocks, or generate base images that they then refine with human judgment and skill. In this optimistic vision, AI becomes like any other tool in the creative toolkit—powerful and useful, but ultimately guided by human intention and elevated by human refinement. After all, photography didn’t destroy painting; it changed it, pushed it in new directions, and freed it to explore abstraction and expression in ways it might not have otherwise. Perhaps AI image generation will do something similar, pushing human artists toward the kinds of creativity that machines still can’t replicate: the deeply personal, the culturally specific, the emotionally resonant work that comes from lived experience rather than pattern recognition.

But there’s a darker possibility that’s harder to dismiss: that we’re creating a technology that can do “good enough” creative work so efficiently and cheaply that the market for human creativity simply collapses in many sectors. Not because AI art is better—it often isn’t, at least not yet—but because it’s faster and cheaper, and in our economy, that frequently wins. We might be heading toward a future where human-created visual art becomes either a luxury good for the wealthy who can afford to commission “authentic” human work, or a hobbyist pursuit that people engage in for personal satisfaction rather than income. The middle-class creative professional—the illustrator taking on commercial projects, the designer working for small and medium businesses—might become increasingly rare. This isn’t just about individual careers; it’s about whether we’ll maintain the diverse creative ecosystems that have produced the visual culture we value, or whether we’ll slide into a kind of creative monoculture where most commercial imagery comes from variations on the same AI training data.

Navigating the Legal and Ethical Minefield

The legal frameworks surrounding AI image generation are still in their infancy, trying to catch up with technology that’s advancing at breakneck speed. Who owns an AI-generated image? Is it the person who wrote the prompt, the company that built the AI, or no one, because it wasn’t created by a human mind? Copyright law in most jurisdictions assumes that creative works are produced by human authors, and AI-generated content doesn’t fit neatly into that assumption; the U.S. Copyright Office, for example, has taken the position that material generated by AI without meaningful human authorship is not eligible for copyright protection. Then there’s the thornier question of training data: these systems learned by analyzing vast numbers of images collected from the internet, many of them copyrighted works by human artists who never consented to having their work used to train a commercial AI system. Is this “fair use,” or is it copyright infringement on an unprecedented scale? Several lawsuits are working their way through the courts right now, and their outcomes will shape the future of the technology.

Beyond the legal questions are ethical ones that law alone can’t answer. Should there be restrictions on what kinds of images can be generated? Most platforms have implemented filters to prevent the creation of explicit content, violent imagery, or pictures of real people without consent, but these guardrails are imperfect and constantly being tested. There’s also the question of bias: AI systems trained on internet images inevitably absorb and reproduce the biases present in that training data, whether that’s unrealistic beauty standards, racial and gender stereotypes, or cultural assumptions. When we let these systems generate the images that will fill our visual landscape, we’re potentially amplifying and legitimizing these biases. Addressing these issues requires not just technical solutions but ongoing human oversight, ethical frameworks, and a willingness to prioritize social good over pure capability. Unfortunately, in the rapid-fire competitive environment of AI development, those considerations often take a back seat to getting the most impressive technology to market as quickly as possible.

Preparing for a Visually Transformed Future

So where does all this leave us? The technology isn’t going away; that genie is firmly out of the bottle. The question becomes how we adapt in ways that preserve what’s valuable while mitigating the harms. We need robust media literacy education that teaches people, from childhood onward, to approach all images with healthy skepticism, to ask questions about source and authenticity, and to understand how AI generation works. We need authentication systems built into cameras and platforms, along the lines of provenance standards such as C2PA’s Content Credentials, that can help distinguish captured images from generated ones, though this will be an ongoing arms race as generation technology continues to improve. We need legal frameworks that protect artists’ rights while enabling beneficial uses of the technology, a balance that will require nuanced policymaking rather than either blanket bans or unrestricted freedom.

Perhaps most importantly, we need to have honest conversations about what we value in visual creativity and why. If we decide that human authorship matters—that there’s something important about images created by people with experiences, perspectives, and intentions—then we need to build economic and cultural systems that reflect that value rather than simply defaulting to whatever’s cheapest and most efficient. This might mean labeling AI-generated content clearly, creating markets specifically for human-created work, or finding new models for compensating creativity in an age of abundant machine generation. The future of visual culture isn’t predetermined; it will be shaped by the choices we make right now about how to integrate this powerful technology into our lives and societies. OpenAI’s image generator is a wake-up call, forcing us to decide what kind of visual future we want to live in while we still have the agency to influence that outcome. The images of tomorrow are being generated today, and we all have a stake in ensuring they reflect the best of human creativity and values rather than just the impressive but potentially dystopian capabilities of our machines.